Founders Insight

Fenil Suchak

Dec 6, 2025

Why We Only Do Personalized Demos

We stopped doing broad demos because showing everything at once looks impressive but rarely converts. Prospects connect with only a fraction of what they see, and the rest dilutes the value. So we rebuilt the process. Every demo now shows:

• The prospect's end state after setup

• Their vertical, GTM motion, and product language

• Their customer lookalikes modeled inside OpenFunnel

The call shifts from a pitch to a jam session, with prospects asking, “wait, how did you figure this out?”

And with LLMs plus our internal abstractions, generating a fully personalized demo takes under twenty minutes.

Fenil Suchak

Dec 4, 2025

The 48-Hour Post-Event Window

Most teams lose momentum after re:Invent, but the 48 hours after everyone flies home are when follow-ups convert best.

People are energized, inboxes aren’t crowded, and conversations are still fresh.

• Send a follow-up with a photo - nobody ignores their own face.

• Reference something specific you discussed.

• Ask a small question before emailing; it boosts opens.

Waiting a week turns warm connections cold. Follow up while it still matters.

Aditya Lahiri

Dec 1, 2025

Who Inside an Account Actually Cares

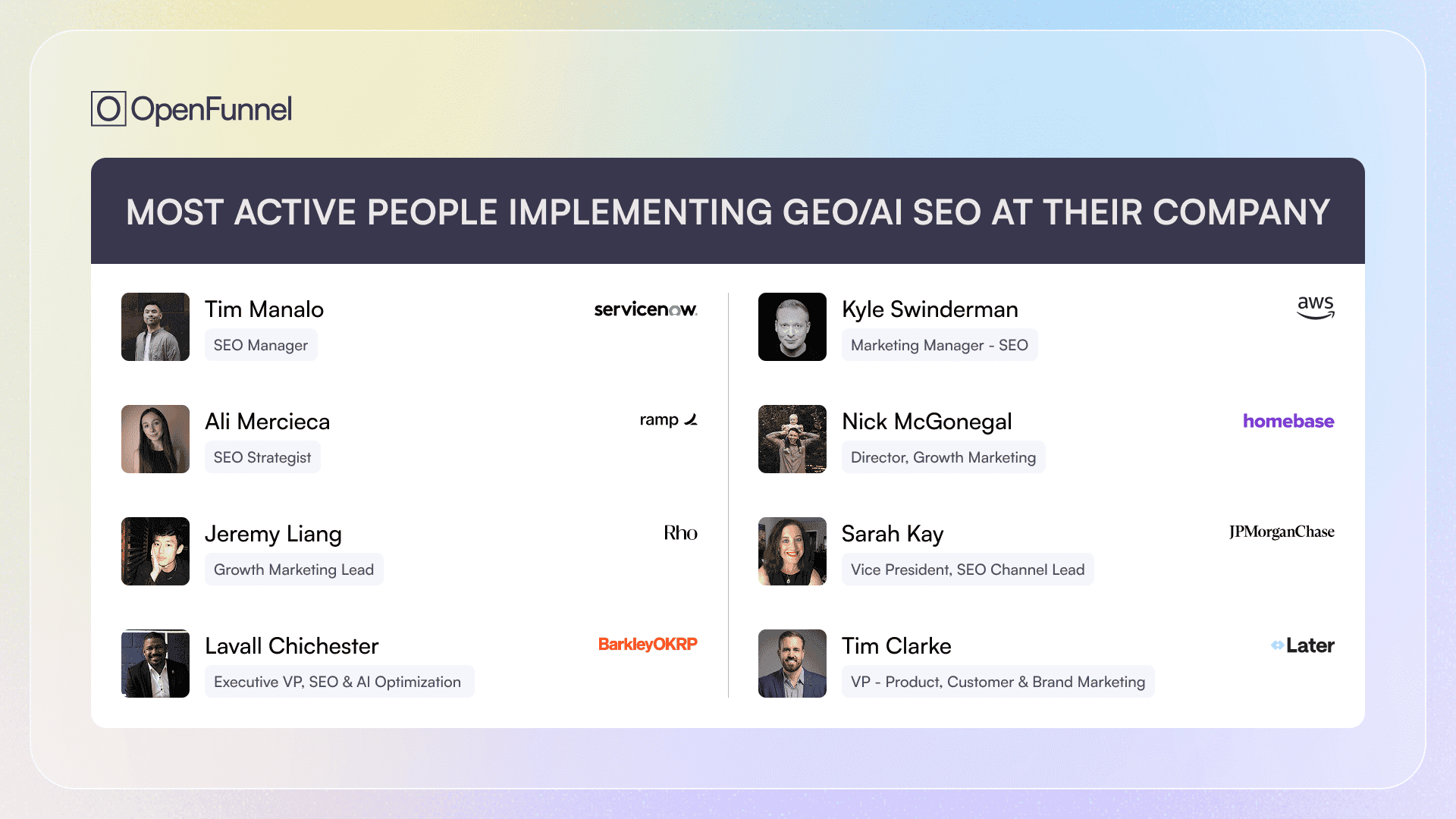

AI is creating new functions faster than LinkedIn titles can update, making it harder to identify the true ICP inside an account. Reasoning LLMs changed this.

They interpret interaction patterns at scale:

• what people engage with

• who they follow

• which competitors they react to

From these signals, interaction clusters reveal who is actually leaning into GEO (generative engine optimization), AEO (answer engine optimization), or any other emerging function. When an account shows GEO activity, LLMs surface the real operators long before their titles change, exposing who understands and drives new functions across large organizations.

Fenil Suchak

Nov 28, 2025

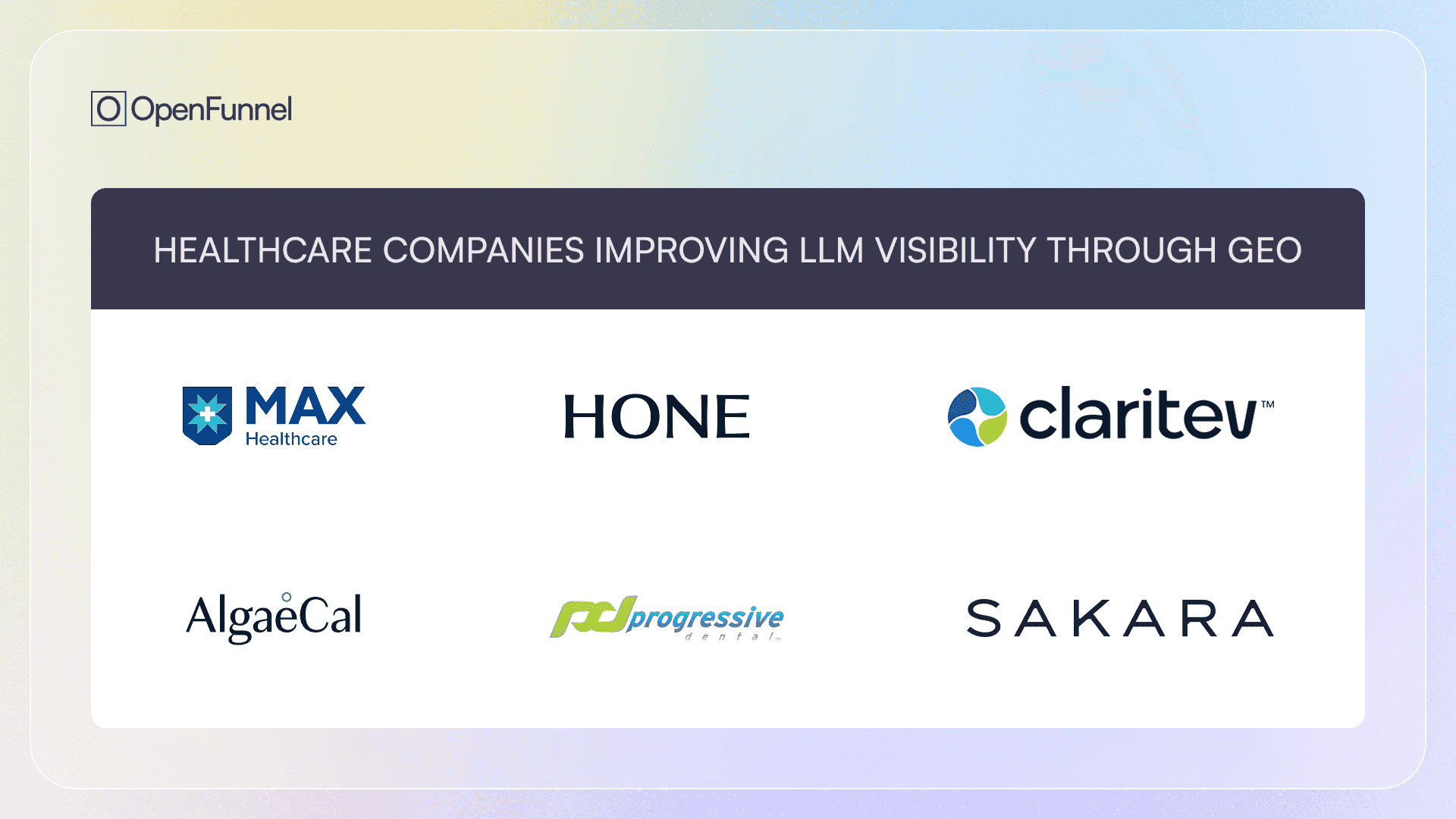

LLMs as Healthcare’s New Referral Layer

LLMs are becoming a referral layer in healthcare as organizations invest in GEO to ensure AI understands specialties and answers intent-driven queries.

• Max Healthcare: structured clinical data for accurate department mapping.

• Hone Health: LLM-friendly diagnostic content.

• Claritev: clearer taxonomies for symptom-to-service alignment.

• AlgaeCal: evidence-based bone-health content.

• Progressive Dental: optimized specialty pages.

• Sakara Life: refined wellness program and ingredient data.

Healthcare GEO ensures models surface the right care for the right conditions every time.

Aditya Lahiri

Nov 27, 2025

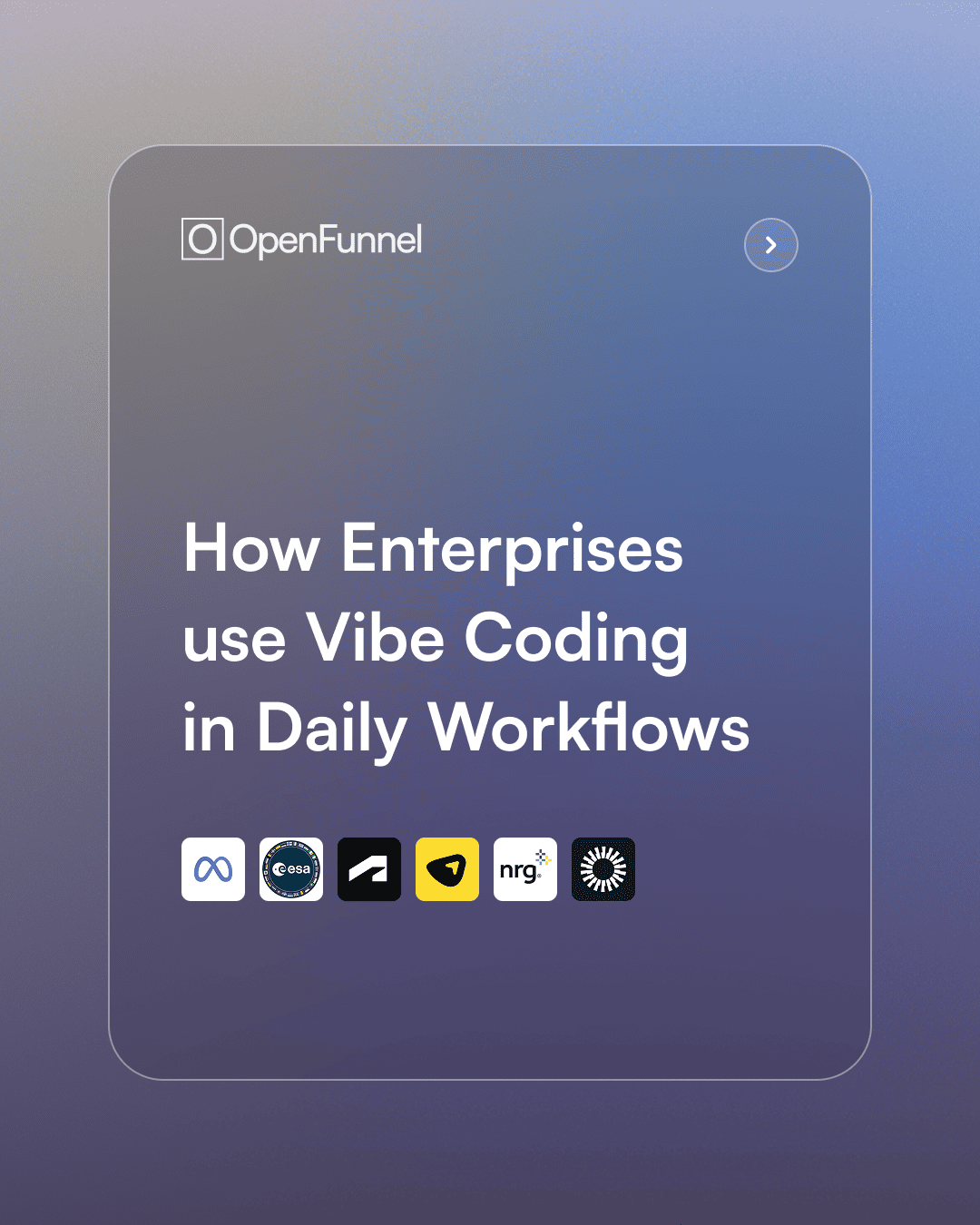

Vibe Coding Inside the Enterprise

“You can’t vibe-code in an enterprise.”

The data disagrees.

Meta: AI PMs building internal tools and agentic automations.

ESA: teams creating rapid prototypes and data-viz mocks.

Autodesk: engineers now required to have vibe-coding experience.

Uplers: backend roles labeled “vibe-coding first.”

NRG Energy: AI teams accelerating infra and multi-agent workflows.

Okta: DevRel and product marketing using v0, Netlify, and Lovable.

Fenil Suchak

Nov 25, 2025

GTM Teams in Founder Mode

GTM and product teams are shifting into founder mode and using vibe-coding tools to prototype without waiting on engineering.

Examples:

Keepme.ai — demand gen shipping landing pages with Lovable

Blink.new — marketers testing acquisition funnels

Scale AI — building demos for client pitches

HubSpot — designers shipping POCs

Razorpay — AI PMs creating internal tools

Eulerity & InOrbit — marketers wiring automations

Fast prototypes cut alignment time and speed up production.

Aditya Lahiri

Nov 24, 2025

Thought Leadership for GEO Visibility

Thought leadership is shifting to AI distribution. Companies are redesigning roles so expertise appears accurately in generative search.

Teams now work to ensure LLMs surface correct narratives across domains:

• PartnerCentric: affiliate marketing

• Giftogram: gifting workflows

• Forter: fraud prevention

• Meltwater: media intelligence

• Versapay: AR ops

• TEKsystems: expert content

• Dykema: legal clarity

• DTN: weather and energy intelligence

Authority now depends on how well AI describes you, and this shift is redefining how expertise is found.

Fenil Suchak

Nov 21, 2025

Why Founder-Built Products Win Everywhere


Fenil Suchak

Nov 20, 2025

Saturday Night Watch Parties

We hold weekend watch parties to review PostHog session replays.

Watching a user glide through the happy path is great, but seeing them drop off at the final step tells the real story.

These moments reveal whether we designed from our own assumptions or captured their instinct.

We take notes, look for friction, and often turn a single replay into a roadmap update because it shows exactly where intent breaks and what users actually expect.

Fenil Suchak

Nov 18, 2025

Why Personalized GTM Databases Matter

Personalized databases work because every product has its own context and triggers.

We build real-time GTM databases from live activity across your entire TAM, surfacing new companies the moment your indicators appear and identifying the right people based on how they engage in the ecosystem.

In AI-SEO, that means spotting teams building GEO or LLM SEO functions and the people interacting with leaders.

Off-the-shelf tools miss this with small static lists and broad, commoditized signals.

Fenil Suchak

Nov 11, 2025

What Is a Personalized GTM Database?

Most "GTM databases" are just contact directories flexing "50M companies"

→ but how many are actually active?

Why account tiering matters:

Horizontal SaaS serves everyone. You need to prioritize based on timing, activity, and traits - not surface filters.

The problem:

Technographics work for qualification, not timing. They don't show what's changing, why it matters, or who's involved.

Static databases → wasted credits on inactive accounts.

Companies move in time.

Last month's non-priority account could be Tier 1 today, for example after it adopts usage-based billing.
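To make the timing point concrete, here is a minimal sketch of tiering by recency-weighted activity. Everything in it is an assumption for illustration: the `Signal` fields, the weights, the 30-day half-life, and the tier thresholds are hypothetical, not how any particular product scores accounts.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Signal:
    kind: str       # hypothetical label, e.g. "usage_based_billing_adopted"
    seen_on: date   # when the activity was observed
    weight: float   # assumed strength of the signal for this product


def tier_account(signals: list[Signal], today: date, half_life_days: float = 30.0) -> int:
    """Return tier 1, 2, or 3 from the recency-decayed sum of signal weights."""
    score = 0.0
    for s in signals:
        # Clamp so a signal dated in the future is not boosted above its weight.
        age_days = max(0, (today - s.seen_on).days)
        # Each signal loses half its weight every `half_life_days`.
        score += s.weight * 0.5 ** (age_days / half_life_days)
    if score >= 1.0:   # assumed threshold
        return 1
    if score >= 0.4:   # assumed threshold
        return 2
    return 3


today = date(2025, 11, 11)
fresh = [Signal("usage_based_billing_adopted", date(2025, 11, 4), 1.5)]
stale = [Signal("usage_based_billing_adopted", date(2025, 5, 4), 1.5)]

print(tier_account(fresh, today))  # same signal, seen last week
print(tier_account(stale, today))  # same signal, seen six months ago
```

The same signal lands in a different tier depending on when it happened, which is the piece a static list cannot express.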

Aditya Lahiri

Oct 29, 2025

Is the Data Layer for Agent Consumption Just Text-to-SQL?

Agents don't think in filters - they think in meaning.

They combine world knowledge with user context to craft semantic queries based on intent, not keywords.

The problem: The industry is stuck translating natural language into pre-set SQL filters. Rigid schemas. Static filters.

Our agent-native data layer:

Vector embeddings + semantic matching for company activities:

What functions are they building?

What migrations are happening?

Who's joining and leaving?

Static filters for deterministic data:

Headcount, location, funding stage

The bet: Agents are smart enough to query both static and semantic fields. We're building for agents that reason around meaning.
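A rough sketch of what querying both kinds of fields could look like: apply the static filters first, then rank what remains by semantic similarity. The `Company` shape, the three-dimensional toy vectors, and the `search` function are all hypothetical stand-ins; a real system would get its vectors from an embedding model and store them in a vector index.

```python
import math
from dataclasses import dataclass


@dataclass
class Company:
    name: str
    headcount: int             # deterministic field
    location: str              # deterministic field
    activity_vec: list[float]  # embedding of recent activity (toy values here)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(companies, query_vec, min_headcount=0, location=None, top_k=3):
    # Step 1: static filters on deterministic fields.
    pool = [
        c for c in companies
        if c.headcount >= min_headcount and (location is None or c.location == location)
    ]
    # Step 2: semantic ranking of the survivors by activity similarity.
    ranked = sorted(pool, key=lambda c: cosine(c.activity_vec, query_vec), reverse=True)
    return [c.name for c in ranked[:top_k]]


companies = [
    Company("Acme", 500, "US", [0.9, 0.1, 0.0]),
    Company("Globex", 40, "US", [0.95, 0.05, 0.0]),
    Company("Initech", 800, "US", [0.1, 0.9, 0.0]),
    Company("Umbrella", 600, "DE", [0.9, 0.1, 0.0]),
]

# Stand-in for an embedded intent such as "building a GEO function".
query_vec = [1.0, 0.0, 0.0]
results = search(companies, query_vec, min_headcount=100, location="US")
print(results)
```

Here the headcount and location filters drop Globex and Umbrella, and the similarity score orders the rest, so the agent never has to squeeze its intent into a preset filter.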

Fenil Suchak

Oct 22, 2025

What Does GTM Data Layer 2.0 Look Like for Humans and GTM Agents?

We're studying GTM timing, movements, and real-time signals that reveal pain points.

One thing became obvious: People and Company APIs are still CRUD operations.

They weren't built for GTM search or insight discovery.

Getting to meaningful insights is slow, inefficient, and fragmented.

The shift: Many teams are refactoring codebases to make them agent-ready. The same shift is coming to GTM.

A data layer for agentic search looks nothing like CRUD APIs.

Converting natural language to API params repeatedly is wildly ineffective. We're just masking text-to-SQL as search.

Aditya Lahiri

Oct 15, 2025

How Are Fast, Cheap Open Source Models Changing How We Build?

Inference providers like Groq unlock two shifts:

LLM-native architecture: We replaced deterministic code with LLMs from day 0.

Example: Natural language blocklists. Instead of hardcoded lists, we use Groq + web search in real time. "Exclude marketing agencies" just works.

Rapid prototyping with model swaps:

OSS models for intermediate reasoning

SOTA models for complex reasoning

v1 fast > perfection. Optimize later based on usage.

The insight: Orchestrate a hierarchy of models - cheap/fast for most flows, expensive/smart only when needed.
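One way to picture that hierarchy is a simple cascade: try the cheap model first and escalate only when its answer doesn't clear a confidence bar. This is a sketch under stated assumptions, not our production router. The `cheap_model` and `strong_model` functions are stubs standing in for real inference calls, and the confidence score and 0.8 threshold are made up for illustration.

```python
from typing import Callable, NamedTuple


class Answer(NamedTuple):
    text: str
    confidence: float  # 0..1, estimated however the caller chooses


Model = Callable[[str], Answer]


def cascade(prompt: str, tiers: list[tuple[str, Model]], threshold: float = 0.8) -> tuple[str, Answer]:
    """Try models cheapest-first and return the first answer that clears
    the threshold; if none does, return the last tier's answer."""
    if not tiers:
        raise ValueError("at least one model tier is required")
    for name, model in tiers:
        answer = model(prompt)
        if answer.confidence >= threshold:
            break
    return name, answer


def cheap_model(prompt: str) -> Answer:
    # Stub for a fast open-source model: pretends to be unsure on long prompts.
    confident = len(prompt.split()) <= 8
    return Answer("cheap: " + prompt, 0.9 if confident else 0.3)


def strong_model(prompt: str) -> Answer:
    # Stub for an expensive state-of-the-art model.
    return Answer("strong: " + prompt, 0.95)


tiers = [("oss-fast", cheap_model), ("sota", strong_model)]

easy = cascade("Is Acme a marketing agency?", tiers)
hard = cascade(
    "Compare the last three pricing page changes and infer whether a move to usage-based billing is underway",
    tiers,
)
print(easy[0])
print(hard[0])
```

Because the tiers are just a list, swapping a model in or out is a one-line change, which is what keeps prototyping fast.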

Fenil Suchak

Oct 12, 2025

Are Speed and Insight the Only Moats in GTM?

With competitors appearing weekly, timely and precise outreach wins.

If you're using static filters - even technographics - you're too late or off on timing.

Funding signals are commoditized. Everyone has them and reacts to them at once, so they turn into signal slop.

You need creative timing and insight - leading indicators of pain with nuance.

Great GTM teams spot live, nuanced movements:

Job posts revealing plans

Website/pricing changes

Hyper-specific layoffs

Sub-departments that stay static too long

Find leading indicators of pain.

That's where timing wins and GTM teams succeed.

Aditya Lahiri

May 12, 2025

What Are the Dumb Ways to Die as an AI Startup in 2025?

Assume models won't get better: Building your moat around "GPT-5 can't do X yet" is a death sentence.

Your defensibility must be orthogonal to model capabilities.

Treat evals as performative: Without rigorous evaluation frameworks, you're flying blind. Evals are your early warning system.

Vibe code production features: Ship fast, not recklessly. In AI products, trust compounds slowly and evaporates instantly.

Skip in-person in SF: The density of AI talent, customers, and capital in SF is unmatched. Serendipity still matters.

Never build a data moat: Proprietary data and feedback loops are real differentiators.

Treat non-AI infrastructure as secondary: Brilliant LLM + broken integrations = customers leave.

Which mistake do you see most often?